Reddit Subreddit Scraper

Use TexAu’s Reddit Subreddit Scraper to extract subreddit details such as descriptions, subscriber counts, and activity metrics, quickly and without coding. Ideal for researchers, marketers, and data enthusiasts.

Tutorial

Overview

The Reddit Subreddit Scraper automation allows you to extract and export detailed information about specific subreddits, including their descriptions, subscriber counts, and activity metrics. This tool is highly valuable for marketers, growth hackers, and researchers who want to analyze community data and track subreddit trends. With options for scheduling, exporting to Google Sheets or CSV, and running on cloud or desktop, this automation makes it easy to monitor and analyze Reddit communities.

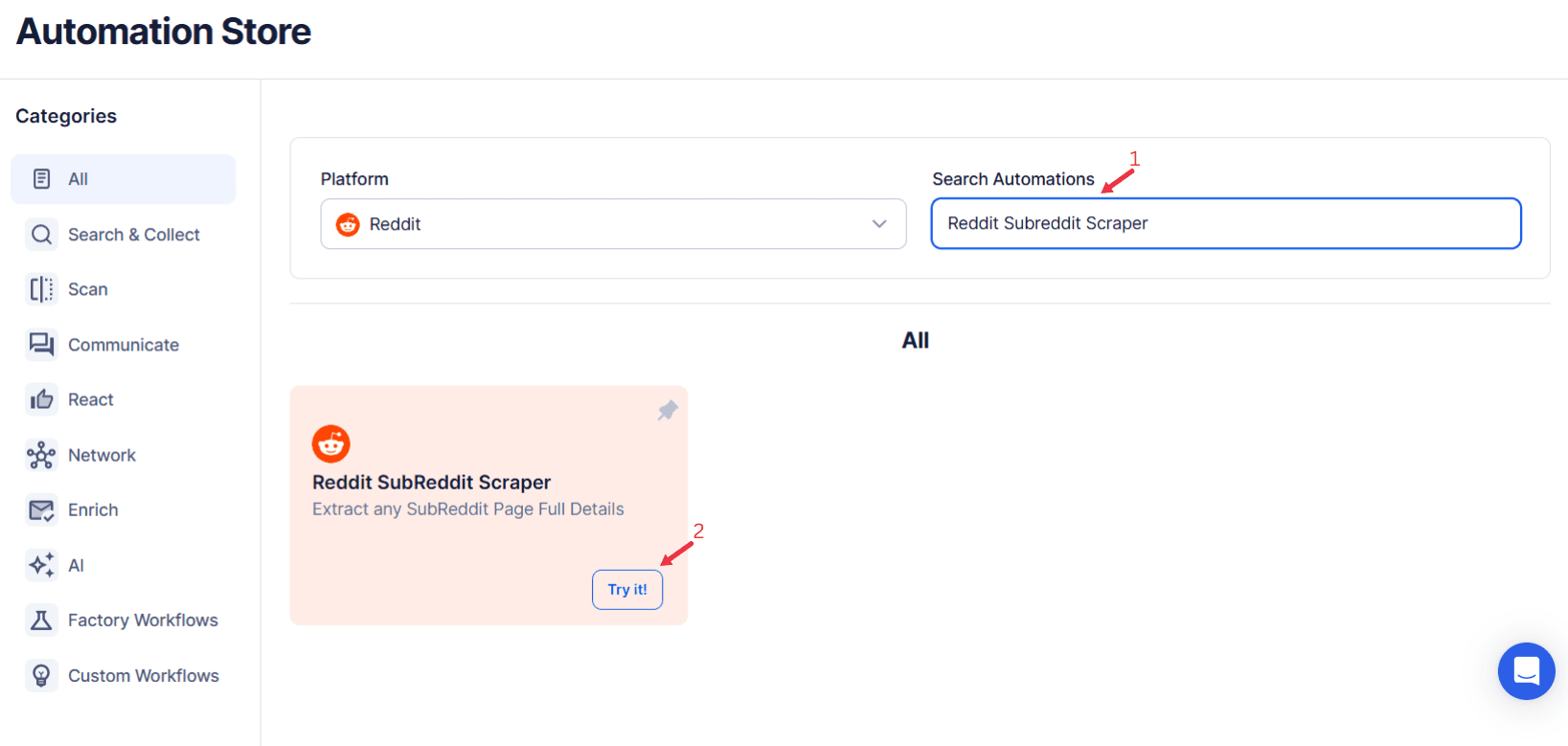

Step 1: Log in to TexAu and Search a Specific Automation

Log in to your TexAu account at v2-prod.texau.com and open the Automation Store. Use the search bar to find the Reddit Subreddit Scraper automation.

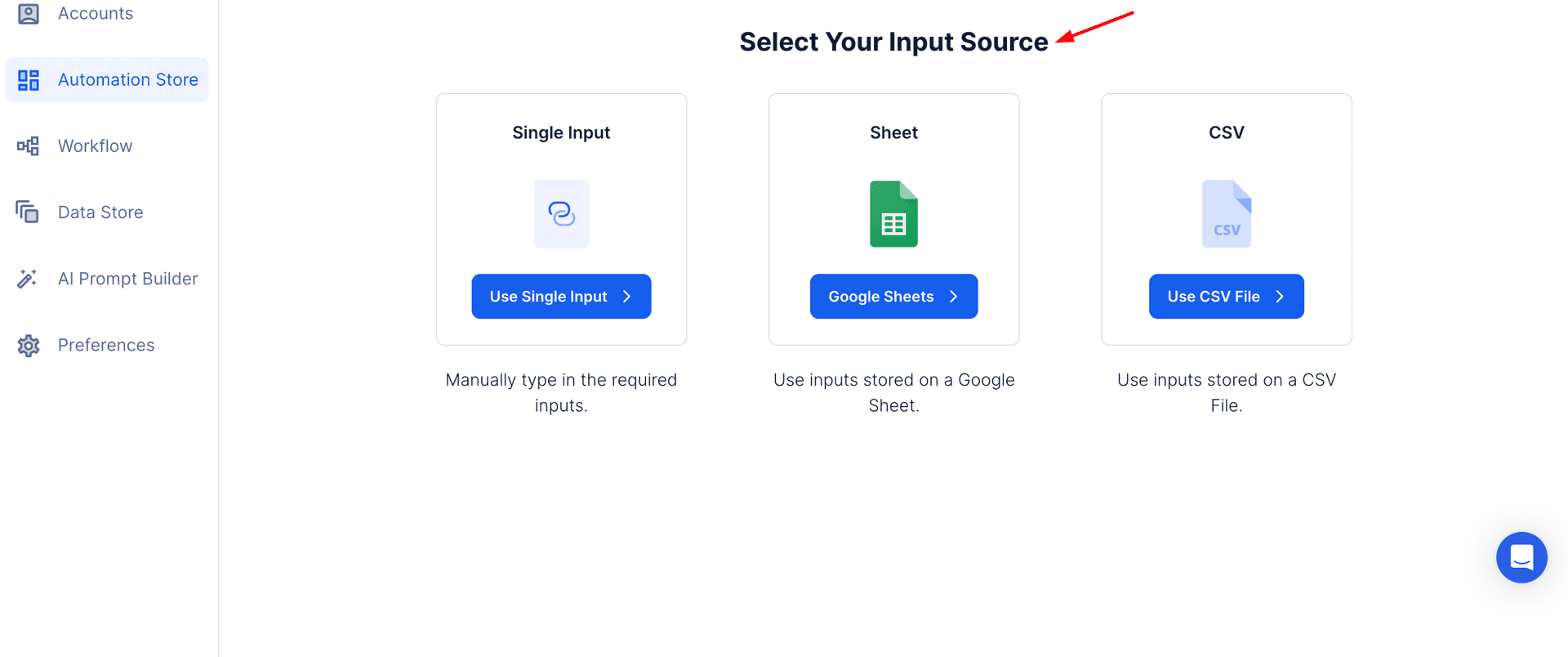

Step 2: Select Your Input Source

Specify the subreddits you want to scrape. TexAu offers three input methods: a single subreddit URL, a Google Sheet, or a CSV file.

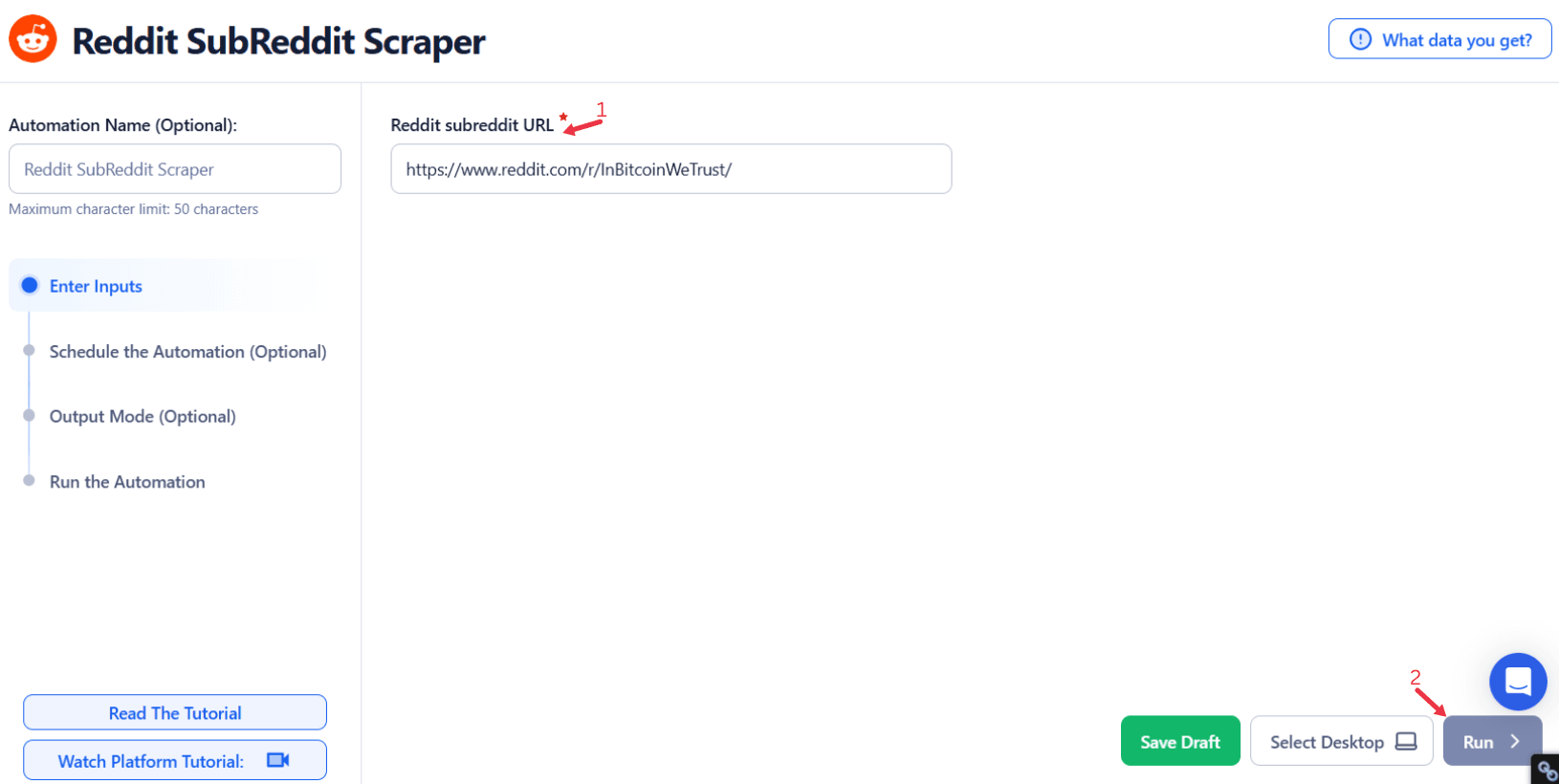

Single Input

Use this option to scrape data from a single subreddit.

- Reddit Subreddit URL: Enter the URL of the subreddit you want to scrape.

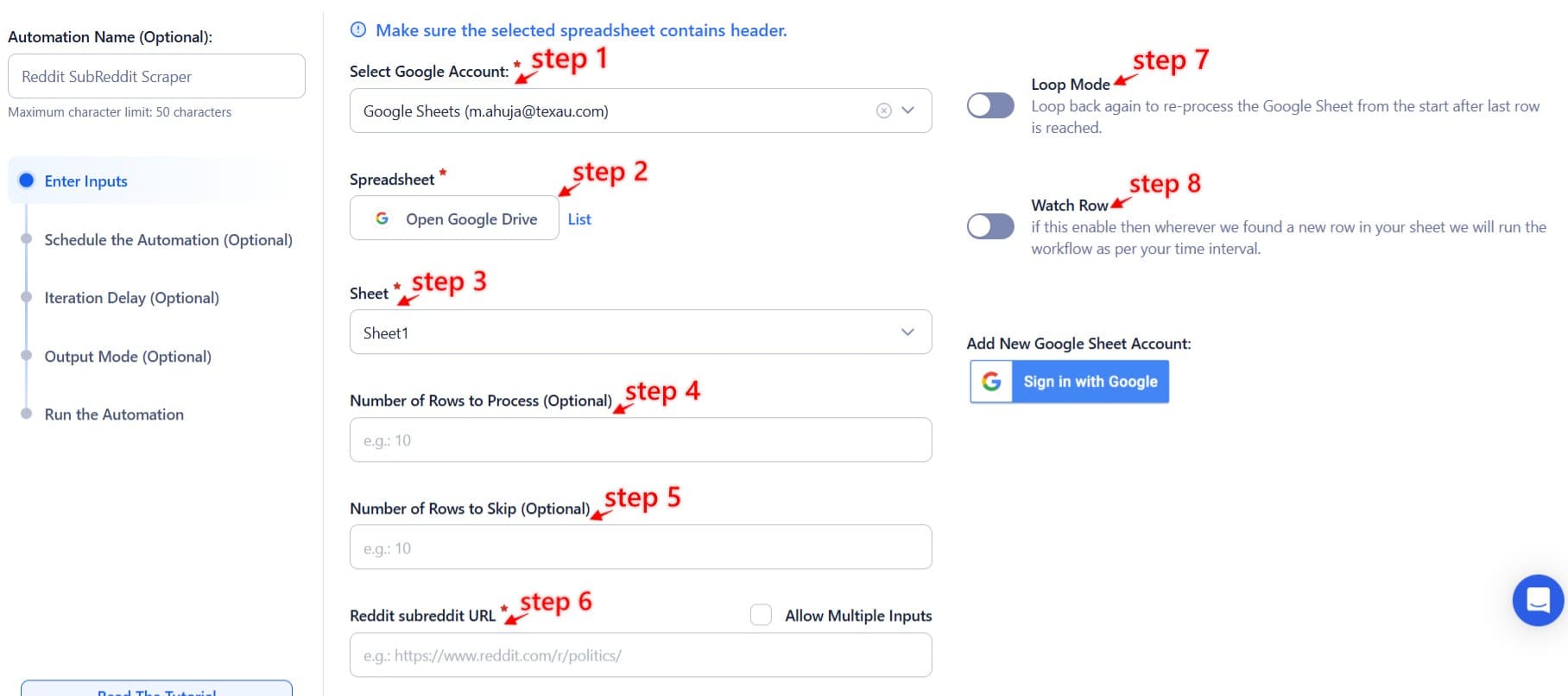
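Before pasting a URL into the Reddit Subreddit URL field, it can help to confirm that it points to a subreddit and not to a post or a user profile. The following is a minimal sketch and not part of TexAu. The accepted URL shape and the 3 to 21 character name rule are assumptions made for illustration, and `subreddit_name` is a hypothetical helper.

```python
import re
from typing import Optional

# Assumed shape: https://www.reddit.com/r/<name>/ (www., old., new. or no subdomain).
# The name rule (3-21 letters, digits or underscores) is an assumption for this sketch.
SUBREDDIT_URL = re.compile(
    r"^https?://(?:(?:www|old|new)\.)?reddit\.com/r/([A-Za-z0-9][A-Za-z0-9_]{2,20})/?$"
)

def subreddit_name(url: str) -> Optional[str]:
    """Return the subreddit name if `url` looks like a subreddit link, else None."""
    match = SUBREDDIT_URL.match(url.strip())
    return match.group(1) if match else None

for candidate in (
    "https://www.reddit.com/r/datascience/",
    "https://www.reddit.com/user/someone",
):
    print(candidate, "->", subreddit_name(candidate))
```

A URL that returns `None` here is worth checking by hand before running the automation.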

Google Sheets

This option is ideal for scraping multiple subreddits listed in a Google Sheet.

- Connect your Google account

  - Click Select Google Account to choose your connected account, or click Add New Google Sheet Account to link a new one.

- Select your spreadsheet

  - Click Open Google Drive to locate the Google Sheet containing subreddit URLs.

  - Choose the spreadsheet and the specific sheet where your data is stored.

- Adjust processing options

  - Number of Rows to Process (Optional): Define how many rows of the sheet should be processed.

  - Number of Rows to Skip (Optional): Specify rows to skip if necessary.

- Provide input details

  - Reddit Subreddit URL: Ensure the correct column contains the subreddit URLs.

- Optional Advanced Feature:

  - Loop Mode: Enable Loop Mode to re-process the Google Sheet from the beginning once all rows are completed. This is useful for tasks that require recurring updates.
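The two row options can be pictured as a slice over the sheet. The sketch below assumes that skipped rows are dropped first and the row limit is applied afterwards. That ordering is an assumption, not documented TexAu behavior, and `select_rows` is a hypothetical helper.

```python
from itertools import islice
from typing import Iterable, Iterator, Optional

def select_rows(rows: Iterable[str], skip: int = 0, limit: Optional[int] = None) -> Iterator[str]:
    """Skip the first `skip` rows, then yield at most `limit` rows (all rows if None)."""
    stop = None if limit is None else skip + limit
    return islice(rows, skip, stop)

# Ten placeholder URLs standing in for the rows of a sheet.
urls = [f"https://www.reddit.com/r/example{i}/" for i in range(1, 11)]

# Skip 2 rows, then process 3: under the assumed ordering this selects rows 3, 4 and 5.
batch = list(select_rows(urls, skip=2, limit=3))
print(batch)
```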

Watch Row (Optional)

The Watch Row feature supports Google Sheet-based workflows by detecting new rows and triggering the automation for them automatically.

Define Watch Row settings by selecting a frequency and setting an execution timeframe.

Watch Row Schedule

- None

- Scheduling Intervals (e.g., every 15 minutes, every hour)

- One-Time Execution

- Daily Execution

- Weekly Recurrence (e.g., every Monday and Friday)

- Monthly Specific Dates (e.g., 7th and 23rd)

- Custom Fixed Dates (e.g., April 14)

By default, Watch Row runs every 15 minutes and continues for five days unless modified.

With Watch Row, TexAu ensures efficient, automated task execution.
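To get a feel for what the default implies, the arithmetic can be worked out directly. This sketch only counts how many 15-minute intervals fit into five days. Whether TexAu also checks at the very start of the window is not stated in this guide, so treat the result as approximate. `checks_in_window` is a hypothetical helper.

```python
from datetime import timedelta

def checks_in_window(interval: timedelta, window: timedelta) -> int:
    """Number of whole intervals that fit inside the execution window."""
    return window // interval

# Defaults stated in this guide: every 15 minutes, for five days.
default_checks = checks_in_window(timedelta(minutes=15), timedelta(days=5))
print(default_checks)  # 480
```

Switching to an hourly interval over the same five days gives 120 intervals.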

Process a CSV File

This option allows you to scrape subreddit data listed in a static CSV file.

- Upload the file

  - Click Upload CSV File and select the file containing subreddit URLs.

  - TexAu will display the file name and preview its content for verification.

- Adjust processing settings

  - Number of Rows to Process (Optional): Define how many rows you want to scrape from the file.

  - Number of Rows to Skip (Optional): Specify rows to skip, if needed.

- Provide input details

  - Reddit Subreddit URL: Ensure the correct column contains the subreddit URLs.

Tip: Use Google Sheets for dynamic or frequently updated lists, and CSV files for static data that doesn’t change often.
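If you keep a plain list of subreddit names, a short script can turn it into a CSV with one URL per row. This is a minimal sketch. The `subreddit_url` header is a made-up column name, since any header should work as long as you map that column to Reddit Subreddit URL. The URL pattern is also an assumption.

```python
import csv
import io
from typing import Iterable

def build_csv(names: Iterable[str], header: str = "subreddit_url") -> str:
    """Return CSV text with one subreddit URL per row under a single header."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    writer.writerow([header])
    for name in names:
        # Assumed URL pattern for a subreddit page.
        writer.writerow([f"https://www.reddit.com/r/{name.strip()}/"])
    return buffer.getvalue()

csv_text = build_csv(["python", "datascience"])
print(csv_text)
# To save the file for upload:
# pathlib.Path("subreddits.csv").write_text(csv_text, encoding="utf-8")
```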

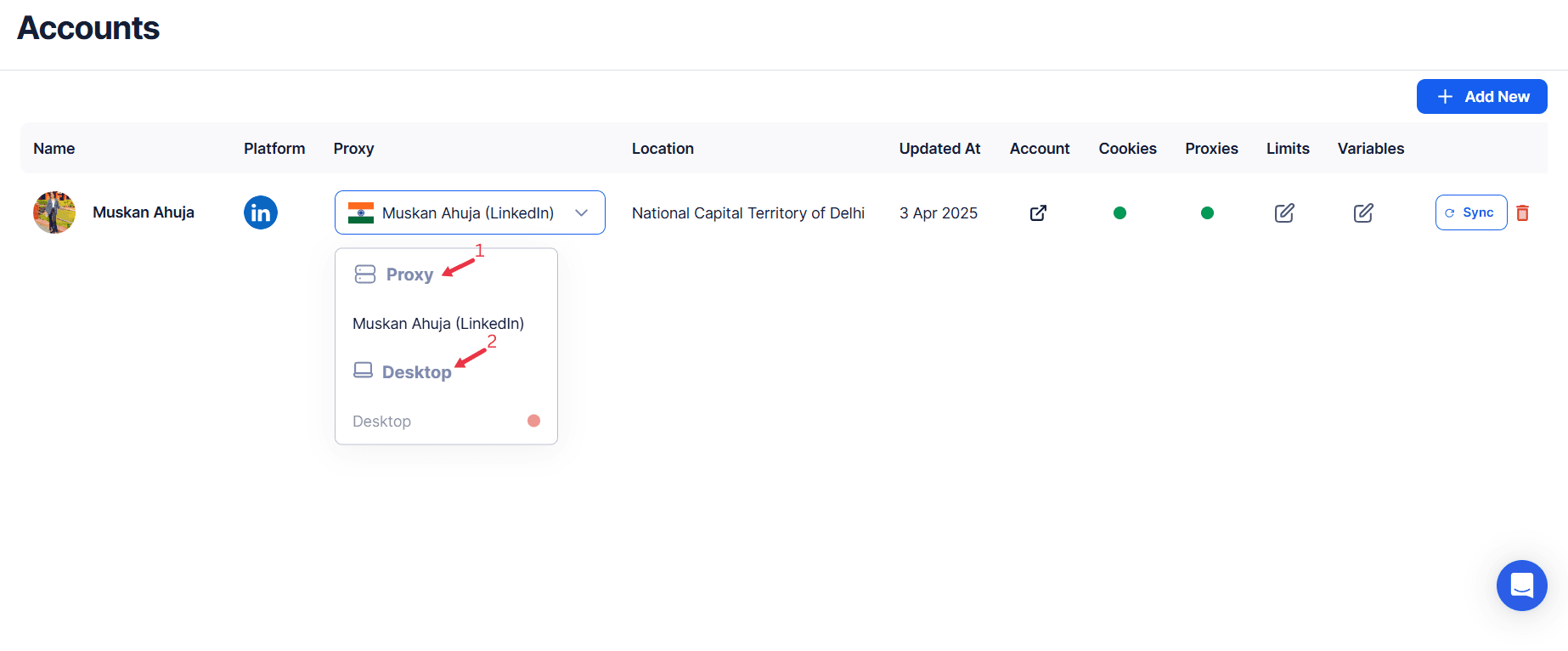

Step 3: Execute Automations on TexAu Desktop or Cloud

- Open the automation setup and select Desktop Mode.

- Click Choose a Desktop to Run this Automation.

- From the platform, select your connected desktop (status will show as "Connected") or choose a different desktop mode or account.

- Click “Use This” after selecting the desktop to run the automation on your local system.

- Alternatively, if you wish to run the automation on the cloud, click Run directly without selecting a desktop.

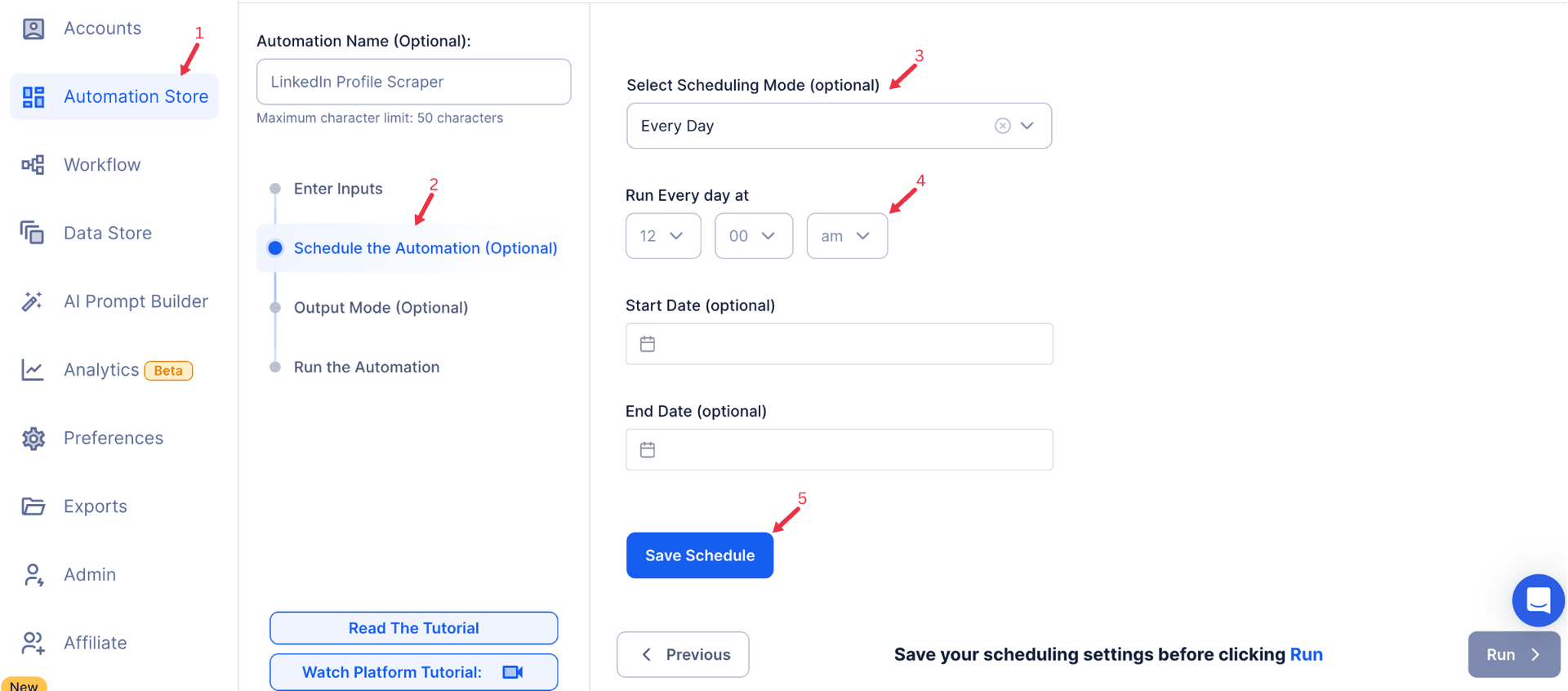

Step 4: Schedule the Automation (Optional)

Set up a schedule to scrape subreddit data periodically. Click Schedule and configure the start time and recurrence frequency:

- None

- At Regular Intervals (e.g., every 6 hours)

- Once

- Every Day

- On Specific Days of the Week (e.g., every Monday and Wednesday)

- On Specific Days of the Month (e.g., the 1st and 15th)

- On Specific Dates (e.g., January 10)

Tip: Scheduling is particularly useful for tracking subreddit growth and activity trends over time.

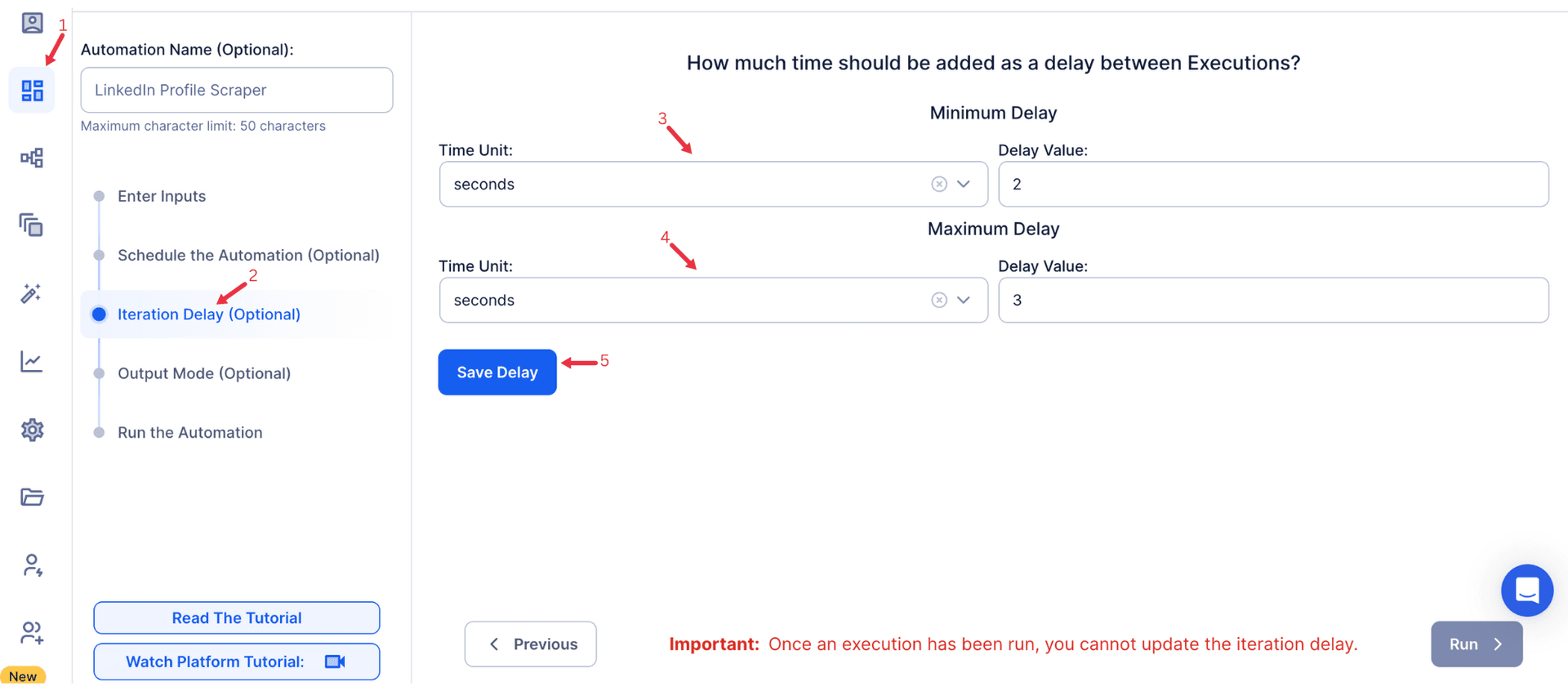

Step 5: Set an Iteration Delay (Optional)

Avoid detection and simulate human-like activity by setting an iteration delay. Choose minimum and maximum time intervals to add randomness between actions. This makes your activity look natural and reduces the chance of being flagged.

- Minimum Delay: Enter the shortest interval (e.g., 10 seconds).

- Maximum Delay: Enter the longest interval (e.g., 20 seconds).

Tip: Random delays help keep your automation safe and reliable.
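Conceptually, an iteration delay pauses for a random time between the minimum and maximum before each new item. The sketch below illustrates that idea only and is not TexAu's implementation. `run_with_delay` is a hypothetical helper, and the `sleep` parameter exists so the example can run without actually waiting.

```python
import random
import time
from typing import Callable, Iterable, List

def run_with_delay(
    items: Iterable[str],
    action: Callable[[str], None],
    min_delay: float = 10.0,
    max_delay: float = 20.0,
    sleep: Callable[[float], None] = time.sleep,
) -> List[float]:
    """Call `action` on each item, pausing a random time between consecutive items."""
    if min_delay > max_delay:
        raise ValueError("min_delay must not exceed max_delay")
    pauses = []
    for index, item in enumerate(items):
        if index > 0:
            pause = random.uniform(min_delay, max_delay)  # seconds, picked fresh each time
            sleep(pause)
            pauses.append(pause)
        action(item)
    return pauses

visited = []
# A no-op sleep keeps the demo instant; drop the argument to wait for real.
pauses = run_with_delay(["r/a", "r/b", "r/c"], visited.append, sleep=lambda seconds: None)
print(visited, len(pauses))
```

With three items there are two pauses, each between 10 and 20 seconds in this configuration.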


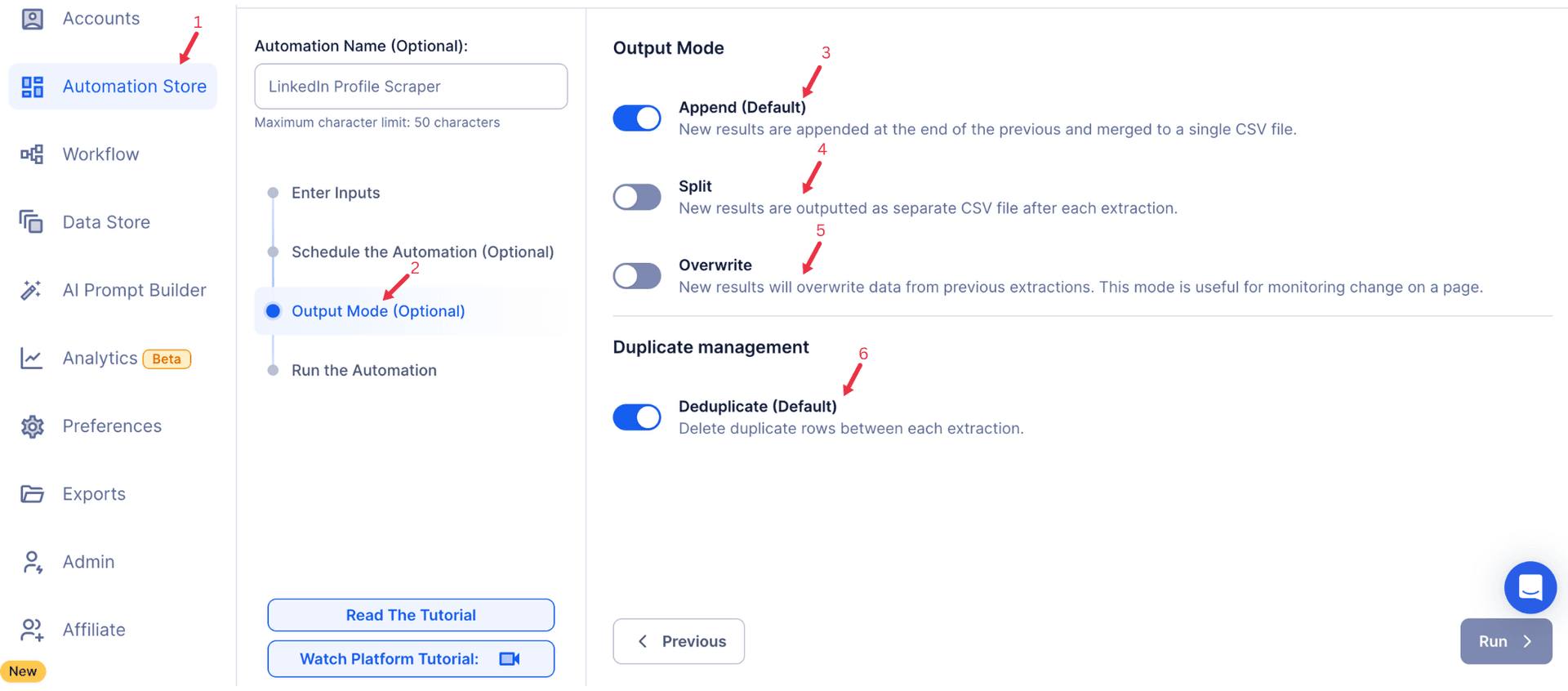

Step 6: Choose Your Output Mode (Optional)

Choose how to save and manage the extracted subreddit data. TexAu provides the following options:

Append (Default): Adds new results to the end of existing data, merging them into a single CSV file.

Split: Saves new results as separate CSV files for each automation run.

Overwrite: Replaces previous data with the latest results.

Duplicate Management: Enable Deduplicate (Default) to remove duplicate rows.

Tip: Google Sheets export makes it easy to collaborate with your team in real time, which is particularly useful for shared subreddit tracking and analysis.
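The difference between the output modes can be sketched with an in-memory stand-in for the result files. This is an illustration of the descriptions above, not TexAu's code. The file names, the `save_run` helper, the sample rows, and the rule that deduplication removes exact duplicate rows are all assumptions.

```python
from typing import Dict, Iterable, List, Tuple

Row = Tuple[str, ...]

def save_run(
    store: Dict[str, List[Row]],
    run_id: int,
    new_rows: Iterable[Row],
    mode: str = "append",
    deduplicate: bool = True,
) -> Dict[str, List[Row]]:
    """Update `store` (file name -> rows) the way each output mode is described."""
    if mode == "split":
        target = f"results_run_{run_id}.csv"  # one file per automation run
        rows = list(new_rows)
    elif mode == "overwrite":
        target = "results.csv"
        rows = list(new_rows)  # previous rows are discarded
    elif mode == "append":
        target = "results.csv"
        rows = store.get(target, []) + list(new_rows)
    else:
        raise ValueError(f"unknown mode: {mode}")
    if deduplicate:
        rows = list(dict.fromkeys(rows))  # drop exact duplicates, keep first occurrence
    store[target] = rows
    return store

store: Dict[str, List[Row]] = {}
save_run(store, 1, [("r/example_a",), ("r/example_b",)])
save_run(store, 2, [("r/example_a",), ("r/example_c",)])
print(store["results.csv"])
```

In Append mode with Deduplicate enabled, the second run adds only the row that was not already present.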


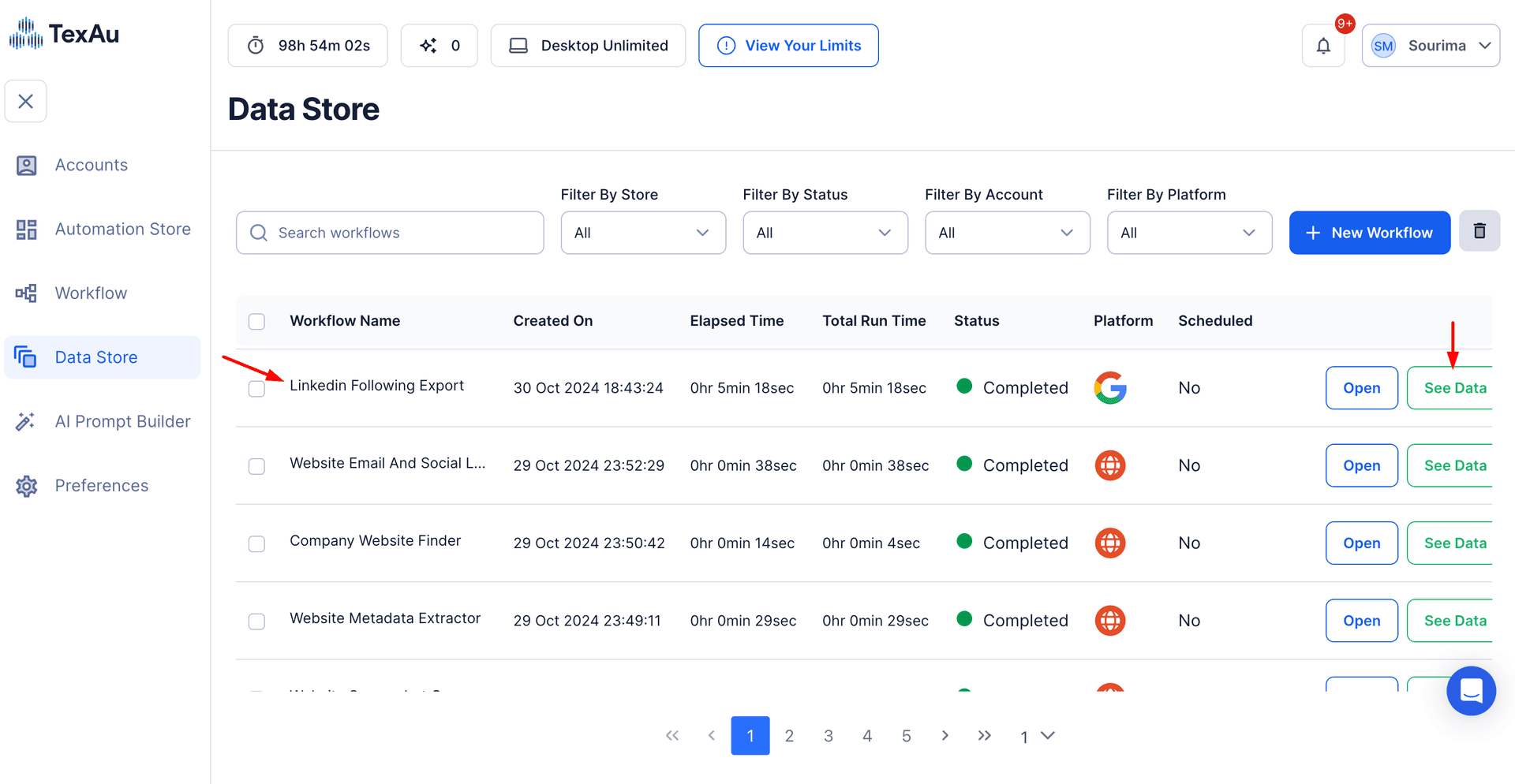

Step 7: Access the Data from the Data Store

Once the automation completes, navigate to the Data Store section in TexAu to view or download the results. Locate the Reddit Subreddit Scraper entry and click See Data to access the extracted subreddit information.

The Reddit Subreddit Scraper automation provides an efficient way to gather detailed subreddit data for analysis, tracking, and strategy development. With features like input customization, scheduling, and seamless export to Google Sheets or CSV, this tool is essential for professionals looking to optimize their Reddit marketing or research initiatives.

Recommended Automations

Explore these related automations to enhance your workflow.

Reddit Subreddit Posts Export

TexAu's Reddit Subreddit Posts Export automation extracts detailed data from subreddit posts, including titles, authors, engagement metrics, and timestamps. Perfect for analyzing trends, monitoring discussions, or gathering insights for outreach and content strategies. Ideal for marketers, researchers, and community managers, TexAu simplifies data collection to help you leverage Reddit for impactful audience engagement and growth.

Reddit Subreddit Comments Export

TexAu's Reddit Subreddit Comments Export automation allows you to extract comments from any subreddit effortlessly. Gather data like usernames, comment content, and timestamps to analyze discussions, monitor trends, or identify engagement opportunities. Ideal for marketers, researchers, and community managers, TexAu streamlines subreddit data collection for audience insights, outreach, or content strategies.

Reddit Trends Export

The Reddit Trends Export automation extracts trending posts and topics from Reddit. Track trends using keywords, subreddits, or custom criteria. Export results to Google Sheets or CSV with options for scheduling and cloud or desktop execution.

Reddit Profile Scraper

TexAu’s Reddit Profile Scraper automates the process of collecting user data, posts, karma, and activity from Reddit profiles. No coding or API access required. Ideal for researchers, marketers, and data enthusiasts looking to extract insights quickly and at scale. Save time, stay organized, and get powerful Reddit data with just a few clicks using TexAu.

Reddit Domain Search Export

The Reddit Domain Search Export automation tracks Reddit posts mentioning specific domains. Perfect for marketers and growth hackers, it enables automated tracking, scheduling, and export to Google Sheets or CSV, simplifying brand monitoring and competitor analysis.

Reddit User Search Export

The Reddit User Search Export automation extracts user data from Reddit based on keywords or subreddits. Ideal for marketers and researchers, this tool enables targeted user analysis and insights. Export data seamlessly to Google Sheets or CSV, with scheduling and bulk processing options.

Reddit User Posts Export

The Reddit User Posts Export automation extracts posts made by specific Reddit users, enabling detailed activity analysis and content tracking. Configure easily and export data to Google Sheets or CSV, with options for scheduling and cloud or desktop execution.

Reddit Post Commenters Export

TexAu's Reddit Post Commenters Export automation extracts details of commenters from any Reddit post. Gather usernames, comment content, and engagement data to analyze trends or identify potential leads. Ideal for marketers, researchers, and community managers, TexAu simplifies data collection, helping you leverage Reddit insights for outreach, audience analysis, or content strategy effectively.

